AI That Knows Your Business

Build AI systems that answer questions using your actual data, not just generic training data. We develop RAG (Retrieval-Augmented Generation) systems that connect LLMs to your documents, databases, and knowledge bases, with proper citations and access control.

RAG in 2026

The foundation for enterprise AI that's accurate and trustworthy.

$10B

Market by 2030

63%

Use GPT Models

80%

Standard Frameworks

#1

Enterprise Use Case

RAG System Components

End-to-end retrieval-augmented generation development.

Document Ingestion & Processing

Ingest PDFs, Word docs, wikis, Notion, Confluence, and databases. Intelligent chunking and metadata extraction for optimal retrieval.
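As a rough illustration of the chunking step, here is a minimal sketch that splits extracted text into overlapping word windows and attaches basic metadata. The function name `chunk_text`, the word-based windowing, and the default sizes are assumptions made for this example; real pipelines often chunk by tokens, sentences, or document structure instead.

```python
def chunk_text(text: str, chunk_size: int = 200, overlap: int = 50) -> list[dict]:
    """Split text into overlapping word-based chunks with simple metadata.

    chunk_size and overlap are counted in words (an assumption for this sketch).
    """
    if overlap >= chunk_size:
        raise ValueError("overlap must be smaller than chunk_size")
    words = text.split()
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(words), step):
        window = words[start:start + chunk_size]
        chunks.append({
            "chunk_id": len(chunks),   # position of the chunk in the document
            "start_word": start,       # offset, useful for citations later
            "text": " ".join(window),
        })
        if start + chunk_size >= len(words):
            break  # the last window already reached the end of the text
    return chunks
```

The overlap means that a sentence falling on a chunk boundary still appears intact in at least one chunk, at the cost of some duplicated storage.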

Vector Database Setup

Deploy and optimize vector stores—Pinecone, Weaviate, Qdrant, or pgvector. Hybrid search combining semantic and keyword matching.
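One common way to combine a semantic ranking with a keyword ranking is reciprocal rank fusion (RRF). The sketch below assumes each search backend has already returned an ordered list of document IDs. The function name and the constant `k = 60` are illustrative choices, not the API of any vector store listed above, several of which ship their own hybrid search.

```python
def reciprocal_rank_fusion(rankings: list[list[str]], k: int = 60) -> list[str]:
    """Merge several ranked lists of document IDs into one ranking.

    Each document scores 1 / (k + rank) per list it appears in; scores are summed.
    """
    scores: dict[str, float] = {}
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] = scores.get(doc_id, 0.0) + 1.0 / (k + rank)
    # Highest fused score first; ties broken by document ID for determinism.
    return sorted(scores, key=lambda d: (-scores[d], d))


semantic_hits = ["a", "b", "c"]   # hypothetical results from vector search
keyword_hits = ["b", "d", "a"]    # hypothetical results from keyword search
fused = reciprocal_rank_fusion([semantic_hits, keyword_hits])
```

Because RRF uses only rank positions, it avoids having to normalize similarity scores that come from different scoring scales.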

Embedding & Retrieval Pipeline

Custom embedding models tuned to your domain. Reranking for relevance. Sub-second retrieval at enterprise scale.

Access Control & Citations

Role-based document access. Every AI answer includes source citations. Audit trails for compliance.

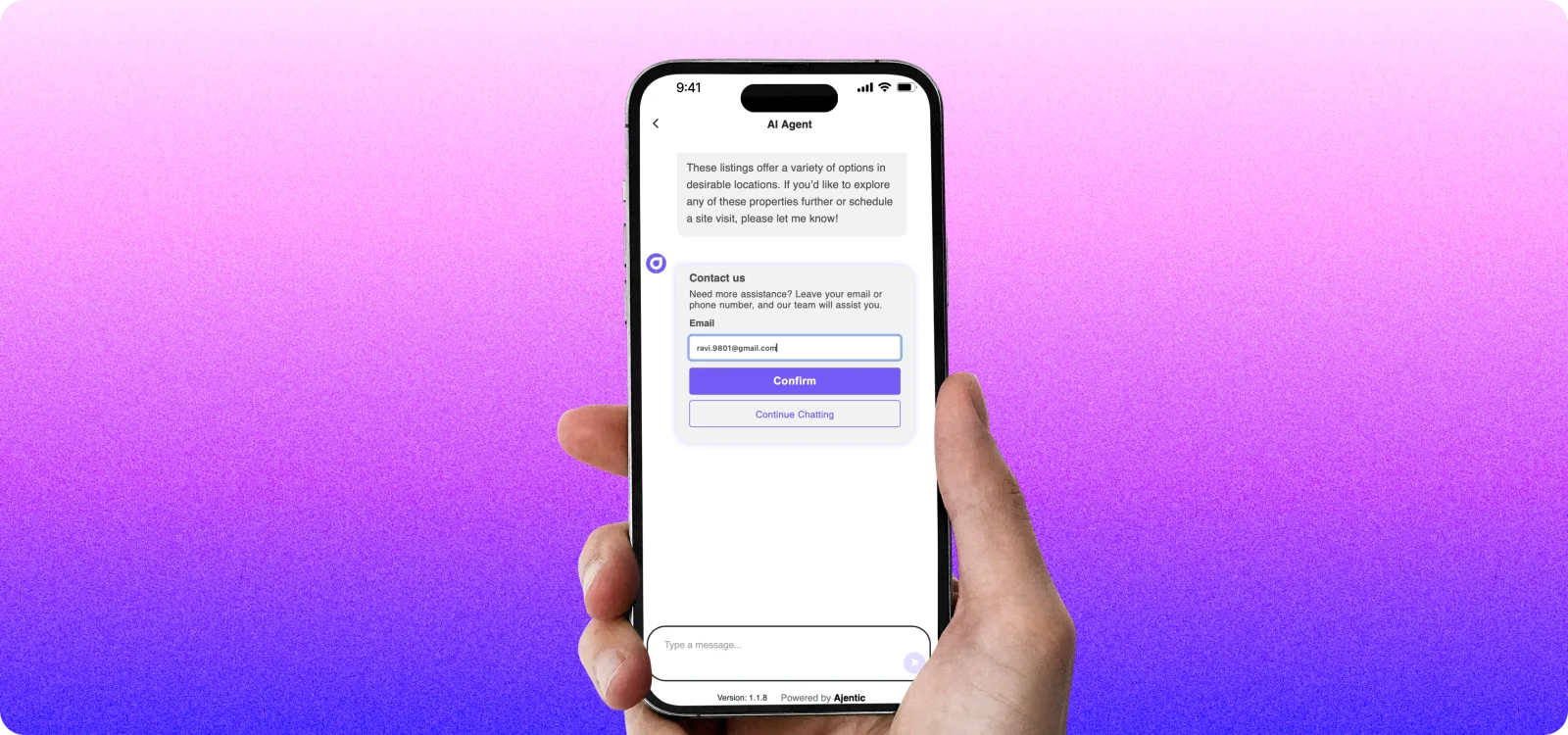

Conversational Interface

Natural language Q&A over your knowledge base. Context-aware follow-up questions. Slack, Teams, or custom UI integration.

Continuous Learning

Feedback loops to improve retrieval quality. Analytics on query patterns. Automatic re-indexing when documents update.
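Automatic re-indexing needs a way to tell which documents have changed. A minimal sketch, assuming the index stores a content hash per document: compare fresh SHA-256 digests with the stored ones and re-embed only the mismatches. The function name and the dictionary-based storage are hypothetical stand-ins for whatever metadata store a real deployment uses.

```python
import hashlib


def docs_to_reindex(current_docs: dict[str, str], indexed_hashes: dict[str, str]) -> list[str]:
    """Return IDs of documents that are new or whose content changed.

    current_docs maps doc ID -> current text; indexed_hashes maps doc ID ->
    the SHA-256 hex digest recorded when the document was last indexed.
    """
    changed = []
    for doc_id, content in current_docs.items():
        digest = hashlib.sha256(content.encode("utf-8")).hexdigest()
        if indexed_hashes.get(doc_id) != digest:
            changed.append(doc_id)
    return sorted(changed)
```

Documents deleted at the source are not handled here; a fuller version would also remove index entries whose IDs no longer appear in `current_docs`.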

Why Enterprises Choose Us

Global timezone overlap for seamless, real-time collaboration with your team.

Frequently Asked Questions

What is RAG and why does it matter?

RAG (Retrieval-Augmented Generation) connects LLMs like GPT-4 to your actual data before generating answers. Without RAG, a model can only draw on its training data and may "hallucinate" facts. With RAG, the system first retrieves relevant documents from your knowledge base, then generates an answer grounded in that information, with citations you can verify.
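The "retrieve first, then generate" flow can be sketched as a prompt-assembly step. The example below assumes retrieval has already produced a list of passages, each with a `source` and `text` field (hypothetical field names). It stops short of calling a model, since that part depends on the LLM provider.

```python
def build_cited_prompt(question: str, passages: list[dict]) -> str:
    """Assemble a prompt that asks the model to answer only from numbered sources."""
    sources = "\n".join(
        f"[{i}] ({p['source']}) {p['text']}"
        for i, p in enumerate(passages, start=1)
    )
    return (
        "Answer the question using only the sources below. "
        "Cite sources as [n]. If the sources do not contain the answer, say so.\n\n"
        f"Sources:\n{sources}\n\n"
        f"Question: {question}\nAnswer:"
    )


# Hypothetical retrieved passages for illustration.
passages = [
    {"source": "handbook.pdf", "text": "Employees accrue 20 vacation days per year."},
    {"source": "policy-wiki", "text": "Unused days carry over up to a limit of 5."},
]
prompt = build_cited_prompt("How many vacation days do I get?", passages)
```

Numbering the sources in the prompt is what lets the generated answer carry `[1]`-style markers that a UI can link back to the original documents.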

What types of data can RAG systems use?

Almost anything text-based: PDFs, Word documents, Confluence/Notion wikis, Slack history, emails, database records, API responses, and more. We handle ingestion, chunking, and indexing. Some clients also include structured data like product catalogs or customer records.

How accurate are RAG systems compared to standard ChatGPT?

Significantly more accurate for your specific domain. Standard ChatGPT has no knowledge of your internal data and may confidently provide incorrect information. A well-built RAG system retrieves real documents and cites sources—reducing hallucinations and enabling verification. Accuracy depends on data quality and retrieval tuning.

How do you handle sensitive or confidential documents?

We implement role-based access control at the retrieval layer. Users only get answers from documents they're authorized to see. We can deploy on your infrastructure (private cloud or on-premise) for maximum data security, or use encrypted cloud solutions with proper access controls.
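A minimal sketch of access control at the retrieval layer, assuming every indexed chunk carries an `allowed_roles` metadata field (a hypothetical name): candidates are filtered by the user's roles before the top results are selected, so unauthorized text never reaches the prompt. Many vector stores can apply an equivalent metadata filter inside the query itself; this example does it in plain Python for clarity.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Chunk:
    doc_id: str
    text: str
    allowed_roles: frozenset[str]  # roles permitted to read this chunk
    score: float                   # retrieval relevance score


def filter_by_role(candidates: list[Chunk], user_roles: set[str], top_k: int = 3) -> list[Chunk]:
    """Keep only chunks the user may see, then return the top_k by score."""
    visible = [c for c in candidates if c.allowed_roles & user_roles]
    return sorted(visible, key=lambda c: c.score, reverse=True)[:top_k]


candidates = [
    Chunk("salaries", "Salary bands ...", frozenset({"hr"}), 0.95),
    Chunk("handbook", "Vacation policy ...", frozenset({"hr", "employee"}), 0.80),
    Chunk("roadmap", "Q3 roadmap ...", frozenset({"employee"}), 0.60),
]
results = filter_by_role(candidates, user_roles={"employee"})
```

Filtering before truncating to `top_k` matters: if the order were reversed, a user could receive fewer results than requested whenever high-scoring restricted chunks crowded out permitted ones.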

What does a RAG project typically involve?

A typical project includes: (1) data audit and ingestion pipeline, (2) vector database setup and optimization, (3) retrieval tuning and reranking, (4) LLM integration with prompt engineering, (5) user interface (chat, API, or embedded), and (6) evaluation and monitoring. Timeline is typically 6-12 weeks depending on data complexity.
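For the evaluation step, one widely used retrieval metric is recall@k: the share of known-relevant documents that appear in the top k results. The sketch below assumes a small hand-labeled set of relevant document IDs per test question; the function name and the sample data are illustrative.

```python
def recall_at_k(retrieved: list[str], relevant: set[str], k: int) -> float:
    """Fraction of relevant document IDs found in the first k retrieved results."""
    if not relevant:
        return 0.0  # convention chosen for this sketch when nothing is labeled relevant
    hits = len(set(retrieved[:k]) & relevant)
    return hits / len(relevant)


retrieved = ["a", "b", "c", "d"]   # hypothetical ranked results for one query
relevant = {"a", "d"}              # hypothetical ground-truth labels
recall_top2 = recall_at_k(retrieved, relevant, k=2)
recall_top4 = recall_at_k(retrieved, relevant, k=4)
```

Tracking this number across a fixed question set before and after a retrieval change gives a simple signal of whether tuning helped.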

Where is your team based?

Our team is based in India, which allows us to offer competitive rates while maintaining high quality. We work with clients globally and provide flexible timezone coverage for collaboration.