DeepSeek has rapidly emerged as a formidable AI chatbot platform from China, symbolized by its blue whale logo. In early 2025, DeepSeek’s mobile app rocketed to the top of the U.S. iOS App Store, even surpassing OpenAI’s ChatGPT as the most-downloaded free appen.wikipedia.org. This stunning rise sent shock waves through the tech industry – Nvidia’s stock plunged nearly 18% (erasing hundreds of billions in market value) on the news of DeepSeek’s debut dallasnews.com. But DeepSeek’s success is far from a fluke. It reflects breakthrough approaches to artificial intelligence that achieve state-of-the-art performance at a fraction of the usual cost and computing power scientificamerican.comscientificamerican.com. Let’s dive into what DeepSeek is, how it works, and why it’s reshaping the global AI landscape.

What is DeepSeek?

DeepSeek is a Chinese artificial intelligence startup focused on building advanced large language models (LLMs). The company was founded in 2023 as a spin-off from a quantitative hedge fund named High-Flyer, based in Hangzhou, China en.wikipedia.org. In fact, DeepSeek’s origin story is unusual: it began with a group of mathematically inclined stock traders who initially aimed to use AI for hedge-fund trading strategies qlarant.com. When that trading venture didn’t take off, founder Liang Wenfeng (who also co-founded High-Flyer) pivoted the team’s deep math and computing expertise toward general-purpose AI models qlarant.com. Backed primarily by the hedge fund (rather than Big Tech companies), DeepSeek set out with a research-first mentality, prioritizing long-term technological advancement over quick commercialization wired.comwired.com.

DeepSeek launched its first LLMs in late 2023, and quickly iterated on them. Its early DeepSeek-V2 model (released in 2024) already performed near ChatGPT’s level but at a much lower cost qlarant.com. The real breakthrough came with DeepSeek-R1, unveiled in January 2025 alongside an eponymous chatbot app en.wikipedia.org. Within weeks, DeepSeek-R1 stunned observers by matching the capabilities of the best U.S. models on key benchmarks – yet it was trained under severe hardware constraints and budget limits. Notably, the company claims it trained its flagship model for under $6 million, compared to an estimated $100+ million that OpenAI spent on GPT-4’s training scientificamerican.com. DeepSeek achieved this on roughly 2,000 Nvidia GPUs (using export-compliant H800 chips) over ~55 days en.wikipedia.orgscientificamerican.com – an astonishing feat of efficiency when rivals traditionally use tens of thousands of top-tier GPUs. This background sets the stage for why DeepSeek is drawing global attention as a potential game-changer in AI.

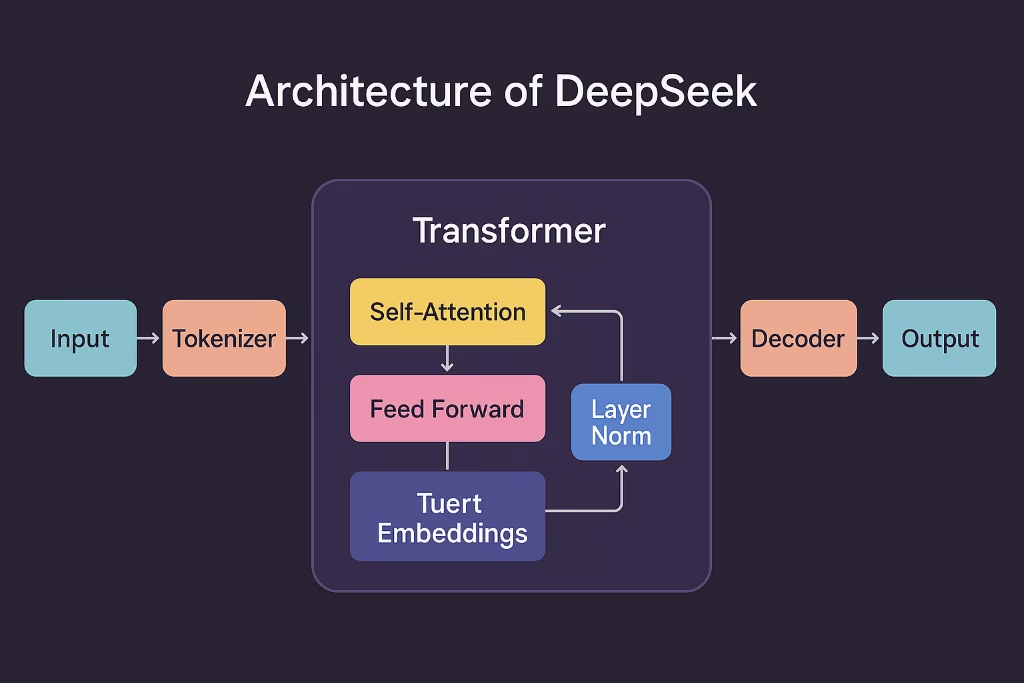

Under the Hood: DeepSeek’s Architecture and Tech Stack

At the core of DeepSeek’s power is an innovative Mixture-of-Experts (MoE) architecture in its models. DeepSeek’s latest text model, DeepSeek V3, is a massive 671 billion-parameter LLM – making it one of the largest AI models ever scientificamerican.com. However, unlike a traditional dense model where all parameters are active for every token, DeepSeek V3’s MoE design means only a subset (“experts”) are engaged per query. In practice, only about 37 billion parameters (the relevant experts) are activated for each token generation, rather than all 671B encord.comscientificamerican.com. This dramatically reduces computation without sacrificing capability. As science author Anil Ananthaswamy explains, MoE allows DeepSeek to tap tens of billions of parameters for a given question instead of hundreds of billions, cutting down cost while retaining accuracy scientificamerican.com.

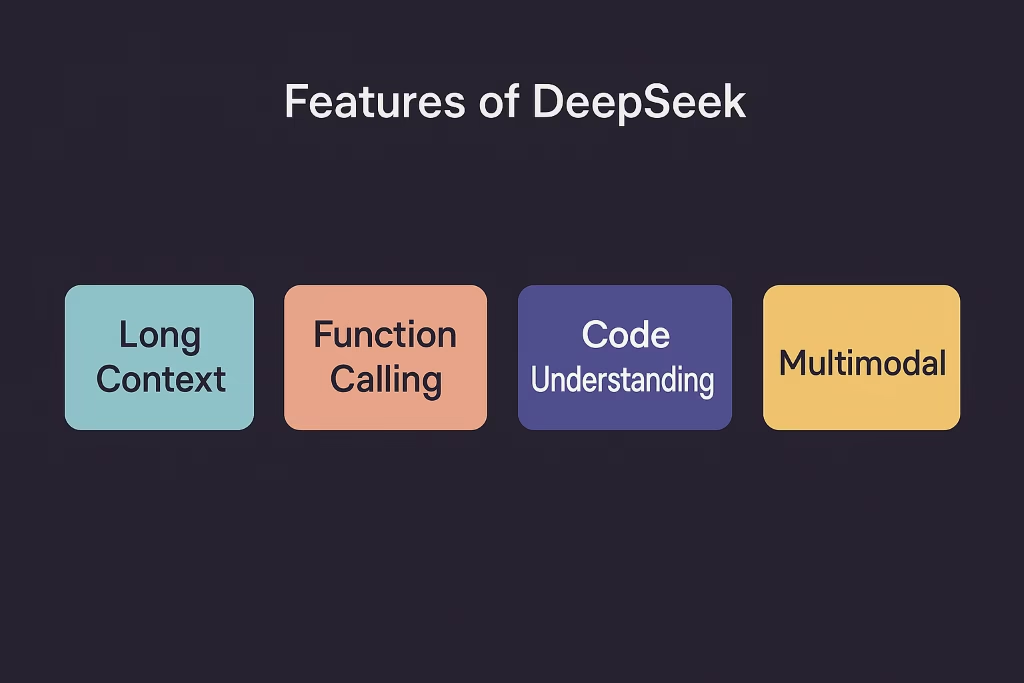

DeepSeek’s architects coupled MoE with other advanced optimizations. The model uses Multi-Head Latent Attention (MLA) – a technique to make the transformer’s attention mechanism faster and more memory-efficient encord.comscientificamerican.com. It also employs Multi-Token Prediction, generating several words in parallel instead of one-by-one, which speeds up inference throughput scientificamerican.comscientificamerican.com. Under the hood, DeepSeek implemented extensive low-level engineering (custom GPU communication schemes, mixed-precision arithmetic, etc.) to maximize training efficiency on limited hardware wired.comwired.com. Impressively, these efforts paid off: training DeepSeek’s latest model required only about one-tenth the computing power that Meta reportedly used for a comparable LLaMA 3 model en.wikipedia.orgwired.com. The model also boasts an extended 128,000-token context window, far surpassing most competitors and enabling it to handle very long documents or dialogues encord.com. Finally, DeepSeek trained on a massive 14.8 trillion-token multilingual dataset (with a slight emphasis on Chinese over English), including an extra focus on math and programming data encord.com. This broad and balanced training corpus, combined with fine-tuning via instructions and feedback, gave the model robust capabilities across languages and domains.

One especially novel aspect of DeepSeek’s tech stack is how it approaches reasoning and learning. Its newest model, DeepSeek-R1, was largely trained through reinforcement learning rather than heavy supervised fine-tuning. The team started with “DeepSeek-R1-Zero,” an initial model that learned purely via reinforcement signals (no human-labeled instruction tuning) encord.com. Through this process, the model organically developed advanced reasoning behaviors – it learned to self-verify, reflect, and follow chain-of-thought logic when solving problems encord.comencord.com. DeepSeek’s engineers used a rule-based reward system (instead of the usual learned reward model) to guide R1’s training qlarant.comscientificamerican.com. In essence, the model was programmed with rules to check its answers (e.g. verifying a math solution or running unit tests on code) and to reward itself for correct outcomes qlarant.comqlarant.com. This approach allowed DeepSeek-R1 to “reason” out solutions and even change its mind mid-problem to try alternate approaches – something human feedback loops usually encourage, but here achieved without an army of human annotators qlarant.comqlarant.com. After this pure RL phase, DeepSeek did incorporate some supervised fine-tuning (with writing and Q&A data) to improve R1’s general helpfulness and reduce issues like messy formatting encord.com. The result is a model that combines clever self-evolved reasoning skills with polished language abilities.

Key Features That Set DeepSeek Apart

Why are industry watchers calling DeepSeek a potential “upending” force in AI? Here are some of DeepSeek’s stand-out features and selling points that distinguish it in the market:

Open “Weight” Model : Unlike most leading AI models which are closed-source, DeepSeek has released its model weights and code under a permissive license (MIT) en.wikipedia.orgen.wikipedia.org. This means enterprises and researchers can freely download and run DeepSeek models on their own hardware, and even fine-tune them, without relying on a black-box API. (The only thing not openly released is the proprietary training data.) This “open-weight” approach fosters transparency and trust – external experts can verify DeepSeek’s claims and build on its models scientificamerican.com. It has led to a vibrant community: thousands of developers have already forked or adapted DeepSeek’s models on GitHub and Hugging Face qlarant.comtechcrunch.com.

Cost Efficiency & Scalability : DeepSeek delivers high-end performance at a fraction of the cost. Its architects achieved cutting-edge GPT-4-level abilities by spending around $5–6 million, dramatically undercutting the nine-figure budgets of OpenAI and others scientificamerican.com. Thanks to the MoE design and training optimizations, running DeepSeek is significantly cheaper in terms of GPU resources and energy. Estimates suggest DeepSeek-R1 can be operated at about one-tenth the running cost of similar competitor models scientificamerican.com. For enterprises, this cost efficiency could translate into more affordable AI services or the ability to self-host powerful models without an astronomical infrastructure spend.

Extremely Long Context and Multimodality: With support for up to 128K tokens of context encord.com, DeepSeek can ingest and analyze long documents, contracts, or conversations in one go – a valuable feature for enterprise applications dealing with lengthy texts. Additionally, the company has developed multimodal capabilities (e.g. the Janus model) that handle both image understanding and generation within a unified AI system encord.com. This means DeepSeek’s technology can power not only chat and text automation, but also AI vision tasks (like describing images or creating illustrations) under one platform.

Advanced Reasoning and Reliability : DeepSeek’s R1 model has a unique strength in complex reasoning tasks such as math, science, and programming. By using reinforcement learning and chain-of-thought techniques, R1 effectively fact-checks itself and shows its work when solving problems techcrunch.comencord.com. This leads to more reliable answers in domains that normally trip up AI. It may take a bit longer to generate a solution (since the model is “thinking through” the steps), but the answers tend to be more accurate and trustworthy – a critical advantage for enterprises using AI in high-stakes scenarios.

Transparent Problem-Solving : DeepSeek’s chatbot front-end actually lets users see the model’s step-by-step reasoning for a given prompt dallasnews.com. For example, if you ask a complex question, DeepSeek might display the intermediate logic or calculation it uses to arrive at the final answer. Users and developers have praised this transparency dallasnews.com. For decision-makers, this feature can inspire greater confidence in AI outputs, as the model isn’t a complete “black box” – you can audit how it derived an answer, which is useful for compliance and debugging.

Competitive Pricing and Access : DeepSeek has adopted an aggressive go-to-market strategy by keeping usage free or low-cost to attract users. Its consumer chatbot app is free with unlimited queries en.wikipedia.org. For enterprise integrations, DeepSeek offers API access priced around $0.55 per million input tokens and $2.19 per million output tokens as of early 2025 en.wikipedia.org. These rates are orders of magnitude cheaper than many Western AI API services (for context, OpenAI’s GPT-4 API has cost around $30–$60 per million tokens). Such a low price point – effectively pennies for massive volumes of text – has forced competitors (including Chinese tech giants like Alibaba and ByteDance) to slash their own AI pricing or even offer models for free to keep up techcrunch.comtechcrunch.com. For enterprises, DeepSeek’s pricing could significantly lower the barrier to deploying advanced AI solutions at scale.

In summary, DeepSeek distinguishes itself through openness, efficiency, strong reasoning abilities, and a disruptive pricing model. These features collectively position it as a compelling alternative to the incumbent AI offerings, especially for global enterprises seeking cost-effective and customizable AI.

DeepSeek vs. GPT-4, Claude, and LLaMA: How It Stacks Up

DeepSeek’s rise naturally invites comparisons with the major American AI models. How does this Chinese upstart lead, lag, or innovate differently versus OpenAI’s GPT-4, Anthropic’s Claude, or Meta’s LLaMA? Below is a brief showdown:

Performance and Intelligence : In many standardized benchmarks, DeepSeek’s models are neck-and-neck with top U.S. models. Internal and third-party tests showed DeepSeek-R1 matching OpenAI’s latest models (GPT-4/o1) on math, coding, and reasoning challenges techcrunch.comscientificamerican.com. For instance, R1 achieved similar scores to OpenAI’s “o1” model on tough coding problems and math quizzes scientificamerican.com. In areas like formal mathematics and logic puzzles, DeepSeek may even have an edge, thanks to its chain-of-thought training. On general knowledge and creative writing, GPT-4 and Claude are still regarded as slightly superior in fine-grained quality – but the gap has narrowed drastically. Claude 2, for example, excels at very lengthy interactive dialogues with its 100K context; DeepSeek V3 matches that with 128K context and provides strong long-form understanding encord.com. Meta’s open LLaMA models (e.g. LLaMA-2 70B) are outperformed by DeepSeek V3 (671B MoE) on most benchmarks; DeepSeek simply throws more (efficiently used) parameters and training data at the problem, yielding higher accuracy techcrunch.com. In short, DeepSeek has proven it can play in the same league as GPT-4 and Claude in terms of raw capability, despite its newcomer status.

Architectural Approach : OpenAI and Anthropic have kept their model architectures proprietary, but it’s believed GPT-4 and Claude are large dense transformer models (with ~100B+ parameters, trained on massive data) and rely heavily on human feedback fine-tuning. DeepSeek took a different route with its Mixture-of-Experts architecture and heavy reliance on self-training via RL. This gives DeepSeek some efficiency advantages – it doesn’t use all its weights at once and it learned to “think” with minimal human intervention. The result is a leaner training pipeline: DeepSeek trained R1 with no large-scale human feedback model in the loop, whereas OpenAI’s GPT-4 famously used extensive RLHF with human labelers. DeepSeek’s rule-based reinforcement strategy is a novel experiment that the likes of GPT-4 and Claude haven’t openly employed scientificamerican.com. Meanwhile, Meta’s LLaMA models are open-source like DeepSeek, but they are fully dense and smaller (7B–70B params for LLaMA2). LLaMA’s open releases helped the community, but DeepSeek’s open 671B MoE model provided an unprecedented level of access to a truly top-tier model. In essence, DeepSeek’s architecture is more cutting-edge in efficiency(MoE, multi-token generation) compared to the dense models of GPT-4/Claude, yet it’s more scalable and powerful (in parameter count and context length) than previous open models like LLaMA.

Key Strengths : Each model has its fortes. GPT-4 is known for its broad general knowledge, fluent creative writing, and strong coding ability; it’s the gold standard for many. Claude (Anthropic) is lauded for its alignment and safety – it’s less likely to produce harmful or disallowed content and can handle very long dialogues gracefully. DeepSeekshines in reasoning-intensive tasks – users note it is less prone to math mistakes and can break down complex problems methodically techcrunch.com. It also has transparency on its side (showing reasoning steps), which neither GPT-4 nor Claude do by default. In terms of context length, DeepSeek V3 actually leapfrogs GPT-4’s 32K token limit with its 128K support, and competes with Claude’s long context. Meta’s LLaMA models, being smaller, are faster and easier to run on limited hardware; they became popular for private offline usage. DeepSeek partially addressed that by releasing distilled smaller versions of R1 (e.g. a 32B model distilled into Alibaba’s Qwen architecture) to cater to those who need lighter models encord.comencord.com.

Current Limitations : One area where DeepSeek lags is in content guardrails and Western context understanding. Because it must comply with Chinese regulations, DeepSeek’s chatbot will refuse or deflect questions on certain political or sensitive topics (e.g. Tiananmen Square, Taiwan’s status) dallasnews.comtechcrunch.com. GPT-4 and Claude, on the other hand, have their own content moderation, but it’s generally focused on hate, violence, etc., rather than political censorship. This difference can be jarring for international users of DeepSeek’s model. Additionally, DeepSeek’s English fluency and cultural knowledge, while very strong, may occasionally be a notch below GPT-4’s, given OpenAI’s vast Western training data. And importantly, GPT-4 benefits from continuous updates via OpenAI’s research and plugin ecosystem, whereas DeepSeek (as of 2025) is a fast-moving open project but not yet as integrated into products. Meta’s LLaMA models, being open, compete with DeepSeek in the open-source arena, but Meta’s models (like LLaMA-2) currently have lower raw performance – they were not top-of-class on many benchmarks that DeepSeek V3 now dominates encord.com.

Overall, DeepSeek has proven itself a viable rival to the best American models. It leads in efficiency and openness, roughly ties on raw performance in many tasks, but may trail in some areas of polish, safety, and integration. For global enterprises, DeepSeek offers a compelling third option – beyond Big Tech’s closed models and the smaller open models – combining high performance with open access.

Geopolitics, Trust, and AI: The Significance of DeepSeek

Beyond the tech specs, DeepSeek’s rise carries major geopolitical and enterprise implications. It has quickly become a flashpoint in the U.S.–China AI competition. For years, the U.S. dominated cutting-edge AI via companies like OpenAI, Google, and Meta. DeepSeek’s emergence as a Chinese model matching Western capabilities is seen as a national triumph for China and a potential challenge to U.S. tech leadership en.wikipedia.orgen.wikipedia.org. Chinese media and experts have lauded DeepSeek as evidence that innovation can thrive despite U.S. export bans on advanced chips – indeed, DeepSeek trained R1 on sanctioned hardware, undermining assumptions behind the export controls thedailystar.netthedailystar.net. Some have even dubbed DeepSeek’s breakthrough as kicking off a “global AI space race” reminiscent of the Cold War eraen.wikipedia.org.

However, with success has come scrutiny and mistrust. Western governments worry that using a Chinese AI service could expose them to security risks or propaganda. Notably, DeepSeek’s compliance with Chinese government censorship rules has raised red flags about data sovereignty and freedom of information en.wikipedia.orgdallasnews.com. The model will avoid answering questions that conflict with Beijing’s “core socialist values,” and its apps collect user data on Chinese servers – facts not lost on foreign regulators techcrunch.comen.wikipedia.org. By March 2025, the U.S. Commerce Department reportedly banned DeepSeek from all its staff devices techcrunch.com. A coalition of U.S. state attorneys general likewise urged banning the app in government settings reuters.com. American officials, including OpenAI’s CEO Sam Altman, have publicly characterized DeepSeek as possibly “state-subsidized” or “state-controlled,” calling for restrictions on its use techcrunch.com. U.S. lawmakers even floated bills to bar DeepSeek outright on government networksreuters.com. Allied nations have followed suit: countries like Australia, Taiwan, and South Korea swiftly blocked DeepSeek on official devices, citing national security concerns reuters.comreuters.com. This echoes the treatment of other Chinese tech (like Huawei or TikTok) – a reflection of geopolitical tensions playing out in AI.

For global enterprises, this geopolitical shadow means due diligence is crucial before embracing DeepSeek. Many Western companies are cautious; for example, Microsoft’s internal policy prohibits employees from using DeepSeek over data security and influence concerns techcrunch.com. On the other hand, Microsoft has made DeepSeek’s model available to Azure cloud customers as an option, suggesting a nuanced approach – enterprises can experiment with DeepSeek in a controlled environment, but perhaps not integrate it into core sensitive workflows yet techcrunch.com. Companies must weigh the benefits of DeepSeek’s tech (performance, cost, flexibility) against possible risks (regulatory backlash, data governance issues). In regions like Europe with strict data privacy laws, using DeepSeek via its Chinese servers could raise GDPR questions. The flip side is data sovereignty for non-U.S. users: some governments and businesses outside the West prefer not to depend on American AI providers. DeepSeek, being open and self-hostable, offers them an AI they can deploy on-premises – keeping data within their own jurisdiction. For instance, a finance firm in Europe or India could take DeepSeek’s open model and run it on local cloud infrastructure, avoiding sending data to U.S. or Chinese data centers. This ability to “bring the model to your data” is highly attractive for sectors with compliance requirements.

Finally, DeepSeek’s ascent is accelerating conversations about AI regulation and collaboration. Its case shows how quickly a newcomer can disrupt the status quo, which might encourage regulators to develop internationally coordinated strategies (to manage AI safety without stifling innovation across borders). It also highlights the need for trust and transparency in AI: DeepSeek chose to open its model precisely to build goodwill and a developer community worldwide wired.comwired.com. That move has won it praise among researchers (many academics are excited that such a powerful model is now accessible for research scientificamerican.comscientificamerican.com). If enterprises and governments can validate DeepSeek’s code and control its deployment, some trust barriers may be overcome. In fact, DeepSeek’s example might push U.S. companies to consider opening up their own models more (to avoid being eclipsed by open alternatives).

Conclusion

DeepSeek’s story – from a hedge fund’s side project to a top-ranked AI app in the U.S. – reads almost like modern tech folklore. But it’s reality, and it signals a broader shift in the AI world. By marrying cutting-edge AI prowess with openness and efficiency, DeepSeek has demonstrated that innovation is not the sole province of Silicon Valley, and that bigger isn’t always better in AI (sometimes smarter is better). For decision-makers in global enterprises, DeepSeek offers both an opportunity and a dilemma. On one hand, it promises GPT-4-like capabilities at a fraction of the cost, with the freedom to customize and self-host an AI model unshackled from a single vendor scientificamerican.comqlarant.com. It could power new products and insights without breaking the bank. On the other hand, its Chinese origin and the surrounding geopolitical frictions mean companies must tread carefully – ensuring that using DeepSeek aligns with their security standards and regulatory environment.

In the coming years, we can expect the “DeepSeek effect” to drive more competition and innovation in AI. Established players will aim to prove that their higher costs and closed models are justified by superior quality or safety. Meanwhile, open-source and international models will strive to catch up and differentiate (as DeepSeek has by focusing on reasoning and efficiency). For enterprises, the key takeaway is that the AI landscape is rapidly diversifying. It’s wise to stay informed about newcomers like DeepSeek, evaluate them on technical merit, and have strategies for multi-model use to avoid over-reliance on any single provider or country. DeepSeek has opened executives’ eyes to the fact that the next AI breakthrough might come from an unexpected corner of the world – and it might just change the way we all do business with AI.

Sources: The information in this article is drawn from a range of reports and analyses on DeepSeek, including news from Wired, Scientific American, TechCrunch, and academic/technical briefs. Key references include descriptions of DeepSeek’s architecture and training methods scientificamerican.comqlarant.com, performance benchmarks comparing it to GPT-4 and others techcrunch.comscientificamerican.com, and commentary on the geopolitical and regulatory responses to DeepSeek’s rise dallasnews.comtechcrunch.com. These sources collectively paint a detailed picture of DeepSeek as of mid-2025 – a rising AI contender making waves far beyond China’s borders.